BERT is being hailed as the biggest change in search since Google released RankBrain in 2015. That fanfare is warranted: Google says BERT will help Search better understand roughly one out of every ten English-language searches in the U.S.

The bottom line is that Google is getting smarter about search, and that means you need to get smarter, too.

Here’s what you need to know about BERT and how it’s going to change your approach to your website content.

What is the Google BERT Update?

Let’s start with the basics.

In technical-speak, BERT is short for Bidirectional Encoder Representations from Transformers. It is Google’s neural network-based technique for natural language processing (NLP) pre-training.

In lay terms, BERT helps computers understand language more like humans do.

BERT was first open-sourced in 2018 and started rolling out in Google Search just a few weeks ago. The initial rollout is for English-language queries, but BERT will expand to other languages over time.

In 2015, RankBrain launched as Google’s first artificial intelligence method for understanding queries. Google designed RankBrain to look at both queries and the content of webpages in Google’s index to better understand the meanings of words.

BERT does not replace RankBrain. It’s an additional way to understand the nuances and context of queries and content so that they can be matched with better and more relevant results.

RankBrain will still be used for queries, but when Google determines that BERT can help it understand a query better, it will apply BERT’s methods as well. Depending on the nature of an individual query, Google may use several different methods, including BERT, to understand it as well as possible.

With the BERT update, Google takes a broader approach to understanding what the language of a query means and how it relates to existing content on the web. This builds on systems Google already has: if you misspell a word in a query, Google’s spelling systems will find the right word for you, and if you use a synonym for a word that appears in relevant documents, Google can match those as well.

BERT can handle tasks such as entity recognition, part-of-speech tagging, and question answering, along with other natural language processing tasks. And because Google has open-sourced the technology, others have created their own variations of BERT.

BERT adds to Google’s existing query tools and gives it another way to understand language. Depending on the nature of your query, Google will use one or several of these tools to decipher the search and return the most relevant results.

BERT will also impact featured snippets. A featured snippet is a concise answer to a user’s question displayed directly on the search results page, so users don’t have to click through to a specific result. The snippet is extracted from a webpage and includes the page’s title and URL.

Featured snippets provide several benefits to sites that earn them. A featured snippet shows that Google chose your page over others as the most relevant answer to a user’s query. It makes your site the quick answer to a specific question, beating the competition in terms of visibility and ranking. Because of that visibility advantage, featured snippets can also increase traffic to a site by as much as 30%.

How BERT will understand searches better

Google’s research has shown that in longer, more conversational searches, prepositions like “for” and “to” matter a lot to the meaning.

BERT accounts for the context of these words in a query. That means users can now search in a way that feels more natural, knowing the results will be more relevant than ever before.

Google did extensive testing to ensure that the BERT changes actually make search results more relevant.

As part of their rollout announcement, Google cited examples of their evaluation process that demonstrated BERT’s ability to understand a user’s intent behind their search.

Let’s take a closer look at some of those examples:

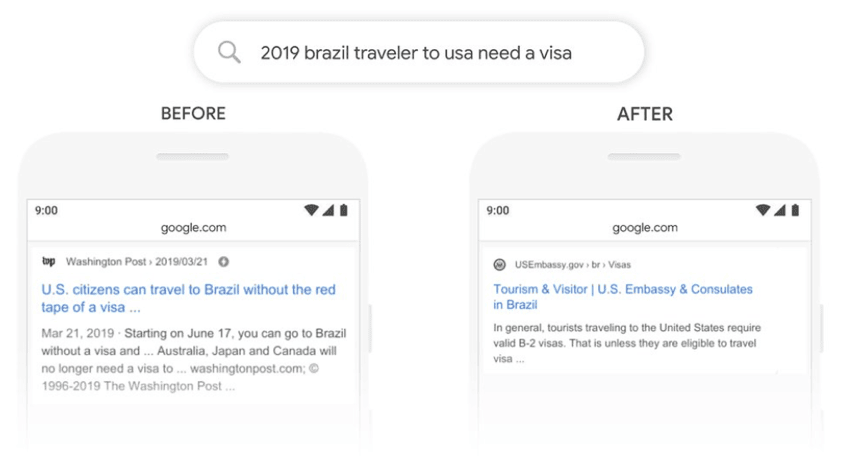

Search for “2019 Brazil traveler to USA needs a visa”

The word “to” and its relationship with the other words in the query are crucial to understanding the query’s overall meaning. Previously, Google’s algorithms did not understand the significance of a Brazilian traveling “to” the United States and would also return results about U.S. citizens traveling to Brazil.

With BERT, this is no longer the case, because it can understand the nuance of “to” in the broader context of the query.

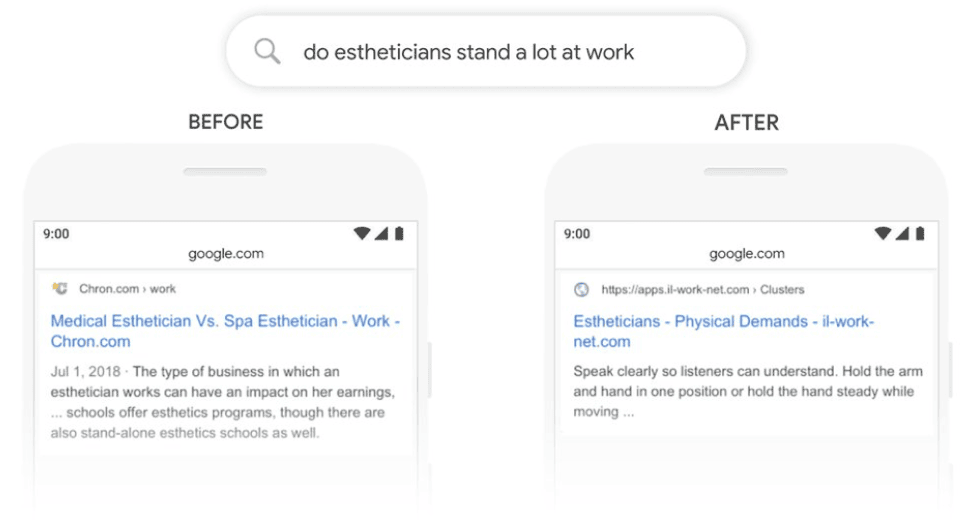

Search for “do estheticians stand a lot at work”

Previously, Google’s systems matched search queries to keywords. In this case, before BERT, Google would have matched the word “stand” in the query with the term “stand-alone” in a result.

BERT recognizes that this is not the proper use of “stand” in this query. Instead, BERT understands that “stand” is related to the physical demands of an esthetician’s job. As a result, it displays more useful results.

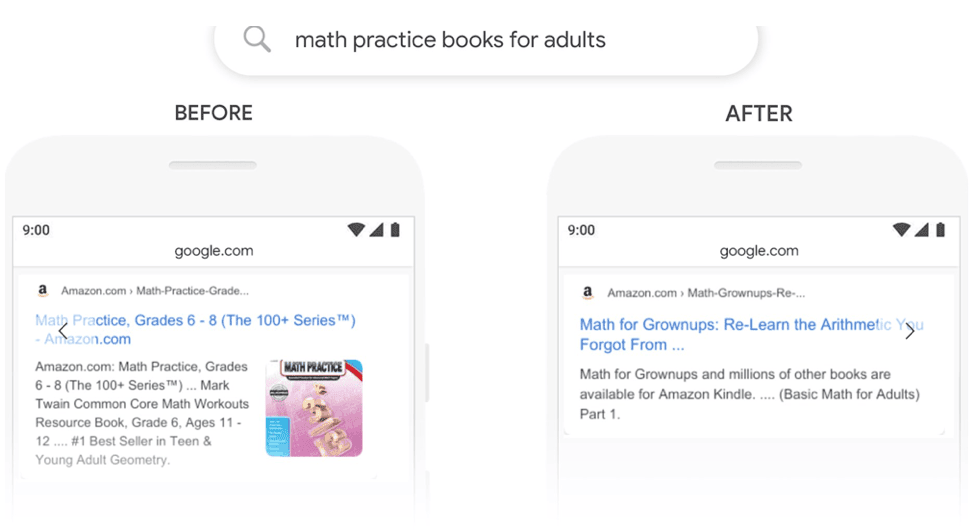

Search for “math practice books for adults”

Previously, Google’s results for this query would have included books in the “young adult” category. BERT understands that “young adult” matches are out of context here and can pick a more relevant, targeted result.

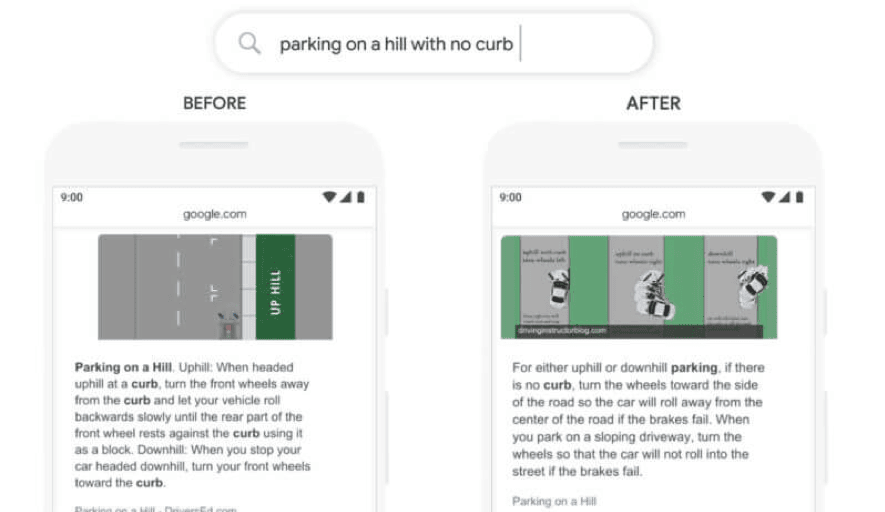

Featured snippet search for “parking on a hill with no curb”

BERT also makes featured snippets more relevant. In this example, “parking on a hill with no curb” is a query that would have confused Google’s systems in the past. Too much emphasis was placed on “curb” and not enough on “no,” and that skewed the results.

Before BERT came online, the importance of the word “no” was not taken into account, so results about “parking on a hill with a curb” were returned instead of results about parking where there is no curb.
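To see why small words like “to” and “no” were easy to lose, here is a toy sketch of pure keyword matching. It assumes a query is reduced to a set of words after dropping common function words; the stop-word list and the `keywords` function are hypothetical, and this is not how Google’s systems actually work. It only illustrates the failure mode in the Brazil and curb examples.

```python
# Toy keyword matching: lowercase the query, split on whitespace, and drop
# common function words. This stop-word list is a HYPOTHETICAL example,
# not the list used by any real search engine.
STOP_WORDS = {"a", "an", "the", "to", "on", "with", "no", "for"}


def keywords(query: str) -> set[str]:
    """Return the set of content words left after dropping stop words."""
    return {word for word in query.lower().split() if word not in STOP_WORDS}


visa_a = "2019 Brazil traveler to USA needs a visa"
visa_b = "2019 USA traveler to Brazil needs a visa"
curb_a = "parking on a hill with no curb"
curb_b = "parking on a hill with a curb"

# A set ignores word order, and "to" and "no" are discarded, so each pair
# collapses to the same keywords even though the meanings differ.
print(keywords(visa_a) == keywords(visa_b))
print(keywords(curb_a) == keywords(curb_b))
print(sorted(keywords(curb_a)))
```

Both comparisons print `True`, which is exactly the ambiguity BERT is meant to resolve by reading each word in the context of the whole query.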

Featured snippets are improving not only in English but in many other languages as well. BERT is being used to improve featured snippets in more than two dozen countries where the feature is available, and Google has already seen significant improvements in languages like Korean, Hindi, and Portuguese.

The importance of pre-training data

Natural language processing (NLP) relies on training data, and one of its biggest challenges is a shortage of that data. Because NLP covers many distinct tasks, most task-specific datasets contain only a few thousand to a few hundred thousand human-labeled training examples.

Modern learning-based NLP models work best when there are more substantial amounts of data. Results improve when there are millions or even billions of annotated training examples.

To help close this gap, researchers have developed techniques that train general-purpose language models on the enormous amount of unannotated text on the web. This is known as pre-training. The pre-trained models can then be fine-tuned on small-data NLP tasks, such as question answering, resulting in substantial accuracy improvements compared to training on those datasets from scratch.
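Pre-training works without human labels because the training signal comes from the text itself. BERT’s approach is to hide some words and train the model to predict them from the surrounding words. The sketch below shows only the data side of that idea: turning unlabeled sentences into (input, answer) pairs. The function name and the naive split on periods are assumptions for illustration; BERT’s published recipe hides about 15% of word pieces and adds refinements omitted here.

```python
import random

MASK = "[MASK]"


def make_masked_examples(
    text: str, mask_rate: float = 0.15, seed: int = 0
) -> list[tuple[list[str], dict[int, str]]]:
    """Turn raw, unlabeled text into (masked_tokens, targets) training pairs.

    Simplified sketch: each word is hidden independently with probability
    mask_rate. The "labels" are the hidden words themselves, so no human
    annotation is required.
    """
    rng = random.Random(seed)
    examples = []
    for sentence in text.split("."):  # naive sentence split (assumption)
        tokens = sentence.split()
        if not tokens:
            continue
        masked, targets = [], {}
        for i, token in enumerate(tokens):
            if rng.random() < mask_rate:
                masked.append(MASK)
                targets[i] = token  # position -> word the model must predict
            else:
                masked.append(token)
        if targets:  # keep only sentences that have something to predict
            examples.append((masked, targets))
    return examples


for masked, targets in make_masked_examples(
    "I accessed the bank account. The river bank flooded.", mask_rate=0.3
):
    print(" ".join(masked), "->", targets)
```

A model trained on millions of such pairs has to use the words around each gap to fill it in, which is what later makes it useful for fine-tuning on small labeled datasets.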

Pre-trained representations can be either context-free or contextual. Why does this matter? A context-free representation gives a word the same meaning wherever it appears, while a contextual representation depends on the other words around it in a query or sentence.

Contextual representations can either be unidirectional or bidirectional. The best results are produced when bidirectional methods are employed.

Let’s take a look at how that would work.

- As an example, in a context-free model the word “bank” has the same representation in “bank account” and “bank of the river.”

- Contextual models generate a representation of each word based on the other words in the sentence. That means, in the sentence “I accessed the bank account,” a unidirectional contextual model would represent “bank” based on “I accessed the” but not “account.”

- BERT, however, represents “bank” using both the words before it and the words after it.

- Both “I accessed the” and “account” are used, starting from the very bottom of a deep neural network. That is what makes BERT deeply bidirectional.

- The concept of bidirectionality has been around for a long time in theory, but BERT is the first deeply bidirectional language representation to be pre-trained using only plain, unlabeled text.

- Pronouns have also been problematic for earlier search systems. BERT helps resolve this problem considerably thanks to its bidirectional nature.
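The difference between the three kinds of representation in the “bank” example comes down to which neighboring words a model is allowed to look at. This minimal sketch shows only that visibility rule; the `context` function and its mode names are hypothetical, and real models turn this context into numeric vectors with a deep neural network rather than returning word lists.

```python
def context(tokens: list[str], index: int, mode: str) -> list[str]:
    """Return the words a model may use when representing tokens[index].

    mode:
      "context_free"  -> no surrounding words (one fixed representation per word)
      "left_to_right" -> only the words before the target (unidirectional)
      "bidirectional" -> words on both sides of the target (BERT-style)
    """
    if mode == "context_free":
        return []
    if mode == "left_to_right":
        return tokens[:index]
    if mode == "bidirectional":
        return tokens[:index] + tokens[index + 1:]
    raise ValueError(f"unknown mode: {mode}")


sentence = "I accessed the bank account".split()
i = sentence.index("bank")
for mode in ("context_free", "left_to_right", "bidirectional"):
    print(f"{mode:>14}: {context(sentence, i, mode)}")
```

Only the bidirectional mode lets “account” inform the representation of “bank,” which is the clue that separates a financial bank from a riverbank.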

How does BERT impact SEO?

With the rollout of BERT, Google advises users to let go of some of their keyword-heavy habits and search in ways that feel more natural. BERT is a vast improvement, but ultimately, it still comes down to using intuition and entering useful queries to help BERT, RankBrain, and other tools deliver the best results.

It’s also important to note that BERT can take what Search learns in one language and apply it to others. Models that learn from improvements in English can be applied in ways that deliver more relevant results in many of the languages Search offers.

Ultimately, search engine results are only as good as the person conducting the search. While BERT makes great strides in making this a more natural and intuitive process, it’s still essential to input the best possible search terminology at the outset of a query.

Also, even though BERT set new state-of-the-art results on 11 NLP tasks, it still won’t help poorly written websites. Well-written content remains as important as ever.

On-page SEO becomes even more important, and using terms in precise ways achieves the best results for your content. The BERT update will not help sloppy content.

With BERT, success won’t always come from creating a 5,000-word page that talks about 100 different things.

Keep in mind that this update is more aligned with answering a searcher’s question as quickly as possible while providing as much value as your competition, or more.

What can you do to improve your website’s rankings after BERT?

The old adage of “garbage in, garbage out” applies to the BERT update.

If you produce poorly written “garbage” content at the outset, expect “garbage” search performance that hurts your standing.

BERT is simply trying to better understand natural language. The more natural your writing is, and the more focused and relevant it is to a particular topic, the better results you’ll see.

This update is designed to better respond to the true intent of the searcher’s query, and then match it to the best possible and most relevant results.

As BERT is implemented, the best thing you can do is monitor your traffic over the coming days and weeks. If you have content that is well written and answers the questions of your target audience, you should be just fine.

If you notice a drop-off, dig deeper into which landing pages were affected and for which queries. You may find that those pages weren’t converting well because the traffic Google sent to them wasn’t actually a good match for what searchers wanted.
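If you export clicks per landing page for two comparable periods before and after the rollout (for example, from the Performance report in Google Search Console), a short script can shortlist the pages worth investigating first. This is a minimal sketch: the dictionary input format, the -20% threshold, and the `find_drops` and `PageDrop` names are hypothetical choices, and the sample numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class PageDrop:
    page: str
    before: int
    after: int
    change_pct: float


def find_drops(
    before: dict[str, int], after: dict[str, int], threshold_pct: float = -20.0
) -> list[PageDrop]:
    """Flag landing pages whose clicks fell by at least threshold_pct percent.

    before/after map a landing-page URL to its clicks over two equal-length
    periods. Pages with zero clicks in the "before" period are skipped
    because there is no baseline to compare against.
    """
    drops = []
    for page, old in before.items():
        if old == 0:
            continue
        new = after.get(page, 0)  # a page missing from "after" got no clicks
        change = (new - old) / old * 100
        if change <= threshold_pct:
            drops.append(PageDrop(page, old, new, round(change, 1)))
    # Worst drops first, so they get investigated first.
    return sorted(drops, key=lambda d: d.change_pct)


# Hypothetical click counts, for illustration only.
before = {"/visa-guide": 1200, "/parking-tips": 800, "/about": 50}
after = {"/visa-guide": 700, "/parking-tips": 790, "/about": 48}
for drop in find_drops(before, after):
    print(drop)
```

Comparing equal-length periods keeps normal weekly swings from masquerading as an update effect; the flagged pages are the ones whose queries deserve a closer look.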

Based on what you discover, look at what is ranking now. Search those queries in Google to see whether a different type of content is ranking or a slightly different question is now being answered.

If that’s the case, strongly consider adjusting your content to reflect how BERT is interpreting those queries. With BERT, slight modifications and simple words in a search query can dramatically alter the search intent.

According to Google, just as with RankBrain, SEOs are unlikely to be able to optimize for BERT directly. Google’s best advice is to simply keep writing good content for users. Content developers need to understand the impact BERT has on searches and queries going forward, but ultimately this is Google’s effort to better understand natural language.

NOTE: The screenshots above were taken from Google’s blog post on BERT.